You’ve finalized your business idea, you’ve secured investment and you’re well on your way to finalizing your new product or service and can’t wait to get it to market. The next step is to create your new website. It can be tempting to jump right in and try to get this over and done with as quickly as possible so you can start showcasing your product or service to the world immediately.

However, if you can resist the urge to rush to go-live and instead take adequate time during the planning and discovery stage, you are more likely to end up with a website that is fit for purpose for all of your stakeholders. As part of this planning, you need to take SEO into consideration from the very start of the project. Your SEO team should play a key role in the planning and discovery process.

SEO is an ongoing process involving different elements including content, PR, authority building and technical considerations – however the backbone of any successful SEO strategy is an “SEO Ready” website. In this article and accompanying checklist, we will outline the key SEO considerations to take into account when it comes to building your new website. Tasks can roughly be categorized into the following groups (although there is some overlap between many of these):

- Crawlability + Indexability

- Site Speed and Performance

- Content

- EEAT

Crawlability + Indexability

Crawling is the process by which search engines discover content on the web. The internet is, fundamentally, just a huge collection of web pages – crawlers find new web pages by following links on pages that they have already discovered.

The next stage in Google’s information retrieval process is indexing. This essentially involves Google analysing the content of the page and storing a copy of that page in its index (a collection of databases).

The technical underpinnings of a website should be optimised to ensure that your website can be easily crawled by search engines, and your content easily understood and indexed.

To ensure that your website is both crawlable and easily indexed by Google, you should follow these tips:

✅ Password Protect Your Staging Site

The last thing you want is for your staging site to get indexed and outrank your actual site when that goes live. The best way to prevent both users and search engines from gaining access to your development and staging environments is to use HTTP Authentication. You can whitelist IP addresses at your office, and provide access to relevant 3rd parties via username/password combinations.

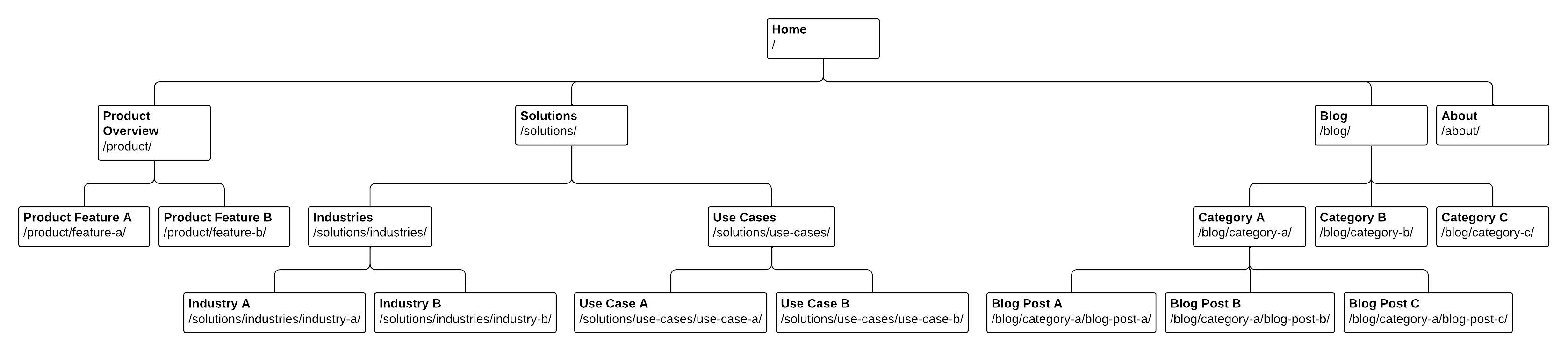
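As a rough illustration of the access policy described above, the Python sketch below combines an IP allowlist with a username/password check on the HTTP Basic Authorization header. The office network range, the credentials and the function name are all hypothetical, and in practice this is usually configured in your web server or hosting platform rather than in application code.

```python
import base64
import hmac
import ipaddress
from typing import Optional

# Hypothetical values, for illustration only.
OFFICE_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
STAGING_USERS = {"agency": "change-me"}


def is_authorized(remote_ip: str, authorization_header: Optional[str]) -> bool:
    """Return True if the request may view the staging site."""
    # 1. Requests from an allowlisted office network are let straight in.
    ip = ipaddress.ip_address(remote_ip)
    if any(ip in network for network in OFFICE_NETWORKS):
        return True

    # 2. Everyone else must send valid HTTP Basic credentials.
    if not authorization_header or not authorization_header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(authorization_header[6:]).decode("utf-8")
        username, password = decoded.split(":", 1)
    except ValueError:
        # Covers malformed base64, bad UTF-8 and a missing ":" separator.
        return False

    expected = STAGING_USERS.get(username)
    if expected is None:
        return False
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(password.encode(), expected.encode())
```

When a check like this fails, the server would normally answer with a 401 Unauthorized status and a WWW-Authenticate: Basic header, which is what makes the browser show its login prompt. Search engine crawlers do not send credentials, so they never see the staging content.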

✅ Plan Out A Logical Site Architecture

Googlebot and other search engine crawlers find new content by following links from pages they have already crawled on your site.

You can make it easier for Google to quickly and efficiently find and crawl all of your pages by ensuring that you have a logical site architecture and internal linking structures to match this site structure – ideally this would include evenly spread content categories and sub-categories for key products/services/content categories.

✅ Ensure Logical URL Structures & Short, Concise, Keyword-rich Page Paths (URLs)

Following on from the previous point, you should also make sure that your site has a logical URL structure to match your site architecture.

URL structures should follow a format something like this: /primary-section/sub-category/specific-page-topic. Specific examples might be:

- /product-overview/product-feature-A

- /blog/blog-category-a/specific-blog-post-A

- /solutions/industries/specific-industry-A

As part of your URL naming conventions, you should include keywords relevant to the page topic in the final part of the page path. For example, if your product is a SaaS BI platform and the page in question is a use case for the financial services industry, the URL path would be /solutions/industries/financial-services-bi-platform

Including relevant keywords in your page URLs can help Google and other search engines better understand what your pages are about. This, in turn, can help your website rank higher in SERPs.

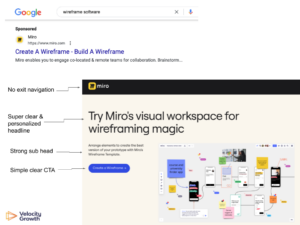
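The naming convention above can be sketched in a few lines of Python. This is only a minimal illustration: the function names are hypothetical, and most CMSs generate slugs for you in a similar way.

```python
import re


def slugify(text: str) -> str:
    """Lowercase the text and join its alphanumeric words with hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)


def build_page_path(sections: list[str], page_topic: str) -> str:
    """Build a /primary-section/sub-category/specific-page-topic style path."""
    parts = [slugify(part) for part in sections + [page_topic]]
    # Drop any part that slugified to an empty string.
    return "/" + "/".join(part for part in parts if part)


path = build_page_path(["Solutions", "Industries"], "Financial Services BI Platform")
# path is "/solutions/industries/financial-services-bi-platform"
```

Keeping the section names in the path means the URL mirrors the site architecture, while the final segment carries the keywords for the specific page.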

✅ Ensure Intuitive Crawlable (HTML) Navigation With A Clear Primary Menu

You’ve already done the hard part by planning out a logical site architecture and URL structures. Now you should ensure that your site navigation adequately reflects this structure so that users (and crawlers) can browse the site logically and never have to work too hard to find related content.

The benefits of a logical site navigation are twofold:

- It ensures that crawlers can efficiently find all of the pages on your site without working too hard

- It helps to spread link equity (authority) throughout your site

Typically, you should allow users and crawlers to easily navigate within sections and sub-sections by following the rules below:

1. All main hub / category pages should be accessible via primary HTML navigation

2. Secondary HTML navigation within main hub / categories should link to related content (e.g. blog category menu within the blog, product features menu within the product overview page, etc.)

The reason that I recommend building and styling navigation via simple HTML & CSS is that Google can often have a hard time finding non-HTML links.

Generally, Google can only crawl your link if it’s an <a> HTML element (also known as anchor element) with an href attribute. Most links in other formats won’t be parsed and extracted by Google’s crawlers. Google can’t reliably extract URLs from <a> elements that don’t have an href attribute or other tags that perform as links because of script events.

Source: Google Search Central
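To make the difference concrete, here is a small Python sketch that extracts links the way the quote above describes: only anchor elements with an href attribute count. It uses the standard library HTML parser, the class and function names are hypothetical, and it is a simplification of what a real crawler does.

```python
from html.parser import HTMLParser


class CrawlableLinkParser(HTMLParser):
    """Collect href values from <a> elements only."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return  # buttons, spans, divs etc. are ignored
        href = dict(attrs).get("href")
        # Skip anchors without an href and javascript: pseudo-links.
        if href and not href.lower().startswith("javascript:"):
            self.links.append(href)


def crawlable_links(html: str) -> list[str]:
    parser = CrawlableLinkParser()
    parser.feed(html)
    return parser.links


menu = (
    '<nav>'
    '<a href="/product-overview">Product</a>'
    '<a onclick="go(\'/blog\')">Blog</a>'
    '<span onclick="go(\'/solutions\')">Solutions</span>'
    '<button onclick="go(\'/contact\')">Contact</button>'
    '</nav>'
)
found = crawlable_links(menu)  # only "/product-overview" is discoverable
```

In this made-up menu, three of the four items work fine for a user with JavaScript enabled, but only the first one exposes a URL that a crawler can follow.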

✅ Build Other Relevant Internal Linking Structures to Spread Link Equity

With a small site, well planned out and implemented navigation structures may be enough to ensure that users and crawlers can always find related content.

However, for larger sites, to ensure that crawlers can find all your content, showcase to Google that you are a topical expert (through topical content clusters) and spread link equity throughout your site, you should also include other relevant internal linking structures to inter-link between related content – for example related posts links on blog posts, related case studies links on industry pages, related services links on case study pages, etc.

When done thoughtfully and strategically, internal linking can significantly contribute to the overall success of your website’s SEO efforts.

A nice example of a well implemented related posts section can be seen here at the bottom of the blog post template on Safely.com.

✅ Implement HTML Pagination (Or Search-friendly Load More / Infinite scroll) Within Larger Sections

As I’ve mentioned previously, when crawling a site to find pages to index, Google only follows links marked up in HTML with <a href> tags. The Google crawler doesn’t follow buttons (unless marked up with <a href>) and doesn’t trigger JavaScript to update the current page contents.

To make sure Google can crawl and index your paginated content, follow these best practices:

- Link pages sequentially

- Use URLs correctly

- Avoid indexing URLs with filters or alternative sort orders

Read more about these pagination best practices on Google Search Central.
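The first two practices above can be sketched in Python as follows. This assumes a ?page=n query parameter, which is one common convention rather than a requirement, and the function names are hypothetical.

```python
from urllib.parse import urlencode


def page_url(base_url: str, page: int) -> str:
    """Give every page its own URL; page 1 lives at the section's base URL."""
    if page == 1:
        return base_url
    return f"{base_url}?{urlencode({'page': page})}"


def pagination_links(base_url: str, page: int, total_pages: int) -> dict[str, str]:
    """Return the URLs a paginated page should link to with plain <a href> links."""
    if not 1 <= page <= total_pages:
        raise ValueError("page out of range")
    links = {"self": page_url(base_url, page)}
    if page > 1:
        links["prev"] = page_url(base_url, page - 1)
    if page < total_pages:
        links["next"] = page_url(base_url, page + 1)
    return links


def render_links(links: dict[str, str]) -> str:
    """Render previous/next as crawlable anchor elements."""
    labels = {"prev": "Previous", "next": "Next"}
    return " ".join(
        f'<a href="{links[key]}">{labels[key]}</a>'
        for key in ("prev", "next")
        if key in links
    )
```

For example, page 2 of a three-page blog at /blog would link back to /blog and forward to /blog?page=3, so a crawler that lands on any page can walk the whole sequence.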

There are ways to build load more / infinite scroll implementations that are SEO friendly but they tend to be trickier than standard HTML pagination – if you’re hellbent on Load More or Infinite Scroll and if you trust your dev team, then by all means go for it. The key is ensuring that Google can actually crawl the content behind the Load More / Infinite Scroll interaction.

You can read Google’s guidelines for search friendly infinite scroll here.


✅ Create an XML Sitemap Containing All URLs That Should Be Indexed

An XML sitemap is a file that helps search engines better understand your website by listing all its important pages. XML stands for Extensible Markup Language, a standard format used in various applications on the internet.

XML sitemaps improve crawling efficiency and can speed up the indexing of your content. They help search engines understand the structure and importance of your content, leading to more accurate indexing and potentially better search engine rankings.

Usually, your CMS will autogenerate an XML sitemap at www.yoursitename.com/sitemap.xml. If not, you can create one with a tool like Screaming Frog.
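If you are curious what such a file contains, the Python sketch below builds a minimal sitemap with the standard library. The URLs are placeholders and the function name is hypothetical; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://www.yoursitename.com/",
    "https://www.yoursitename.com/blog/",
])
```

Only list URLs that you actually want indexed: leave out redirects, error pages and anything you have deliberately excluded from search.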

✅ Create a Google Search Console Profile and Submit Your XML Sitemap

Whilst Google should find your XML sitemap by itself, submitting it to Google Search Console gives Google a helping hand and also gives you valuable insights into how Google interacts with your website. You can monitor crawl stats, see how many pages are indexed, and identify any issues that may be affecting your site’s performance in search.

Submitting your XML sitemap to Google:

Before You Start

Ensure you have a Google Account and that your website is verified with Google Search Console. If your website isn’t already verified, you’ll need to do that first by adding a verification code to your website or using a domain name provider.

Step-by-Step Guide

- Log into Google Search Console: Go to Google Search Console and sign in with your Google account.

- Select Your Website: Once logged in, select the property (website) you want to submit the sitemap for from the property list.

- Find the Sitemaps Section: On the left-hand side, you’ll see a menu. Click on ‘Sitemaps’. The Sitemaps section is where you manage your sitemaps and see any sitemaps that have already been submitted.

- Prepare Your Sitemap: Before submitting, make sure your XML sitemap is ready. It should be live on your website (e.g., https://www.yourwebsite.com/sitemap.xml). Verify that it’s correctly formatted and accessible in a web browser.

- Submit Your Sitemap: In the ‘Add a new sitemap’ section, enter the URL of your sitemap. You only need to include the part of the URL that comes after your domain name. For example, if your sitemap URL is https://www.yourwebsite.com/sitemap.xml, you just enter sitemap.xml.

- Click Submit: After entering the URL, click the ‘Submit’ button. Google will now process your sitemap. This process can take some time, so you might not see results immediately.

- Check for Errors: After submission, stay in the Sitemaps section to monitor the status. Google will show whether the sitemap was successfully processed or if there were any issues you need to address.

✅ Configure Your Robots.txt File To Guide Search Engine Bots

A robots.txt file is a text file used by websites to communicate with web crawlers and other web robots, primarily to tell them which areas of the website they should not crawl. It helps search engine bots (such as Googlebot) navigate and crawl your site effectively while keeping them out of certain areas or files that you don’t want crawled.

The file is placed in the root directory (www.mysite.com/robots.txt) and typically specifies which parts of the site should be excluded from crawling through “disallow” rules.

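For a staging site, for example, you could add the following rules to your robots.txt to direct Google and other crawlers not to crawl any of the site content (password protection, as described earlier, remains the more reliable safeguard):

    User-agent: *
    Disallow: /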

For a live WordPress site, a basic robots.txt file would look like this:

    User-agent: *
    Disallow: /wp-admin/
    Allow: /wp-admin/admin-ajax.php

This tells all bots (“*” represents all bots, you could also create rules for specific bots) that they can crawl all content on the site except the /wp-admin/ folder. However, they are allowed to crawl one file in the /wp-admin/ folder called admin-ajax.php (the reason for this setting is that Google Search Console used to report an error if it wasn’t able to crawl the admin-ajax.php file).

You can also show Google where to find your XML sitemap through the robots.txt file, e.g.:

    User-agent: *
    Disallow: /wp-admin/
    Allow: /wp-admin/admin-ajax.php
    Sitemap: https://www.mysite.com/sitemap.xml
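Before going live, it can be worth sanity-checking your rules. The sketch below does this with Python's standard library robots.txt parser, using the example rules above with each directive on its own line. One caveat: Python's parser applies the first matching rule in file order, whereas Google applies the most specific matching rule, so the admin-ajax.php exception is deliberately not checked here. The helper name is hypothetical.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://www.mysite.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Public content should be crawlable, the admin area should not.
blog_allowed = rules.can_fetch("Googlebot", "https://www.mysite.com/blog/")
admin_allowed = rules.can_fetch("Googlebot", "https://www.mysite.com/wp-admin/")

# Sitemap URLs declared in the file (site_maps() needs Python 3.8 or later).
sitemaps = rules.site_maps()
```

The most important check before launch is the reverse one: make sure the blanket Disallow: / rule from your staging site has not been carried over to the live site.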

✅ Implement Breadcrumb Navigation

Breadcrumbs are a navigational feature used on websites to show the user’s path to their current location in the site’s hierarchy. They are typically displayed as a row of links at the top of a page and look something like “Home > Category > Subcategory > Page.” Breadcrumbs provide a convenient way for users to navigate through the site, allowing them to understand where they are in the context of the site’s structure and easily backtrack to previous sections.

They also help with SEO by:

- defining a clear site structure for search engines

- improving site engagement

- autogenerating a tonne of links with keyword rich anchor texts
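If your URL structure mirrors your site architecture, a breadcrumb trail can be derived almost mechanically from the page path. The Python sketch below shows the idea; the function names are hypothetical, and deriving labels from URL slugs is a simplifying assumption (a real site would normally use the page or category titles instead).

```python
def breadcrumbs(path: str) -> list[tuple[str, str]]:
    """Turn /a/b/c into a list of (label, url) pairs, starting from Home."""
    trail = [("Home", "/")]
    current = ""
    for segment in path.strip("/").split("/"):
        if not segment:
            continue
        current += "/" + segment
        label = segment.replace("-", " ").title()
        trail.append((label, current))
    return trail


def render_breadcrumbs(trail: list[tuple[str, str]]) -> str:
    """Render every level except the current page as a crawlable link."""
    parts = [f'<a href="{url}">{label}</a>' for label, url in trail[:-1]]
    parts.append(trail[-1][0])  # the current page is shown as plain text
    return " > ".join(parts)


trail = breadcrumbs("/blog/link-building")
html = render_breadcrumbs(trail)
```

Each level above the current page becomes a normal HTML link whose anchor text is the name of that section, which is where the internal linking benefit comes from.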

Site Speed and Performance

Search engines like Google prioritize user experience, so faster sites are often ranked higher in search results. Additionally, slow-loading websites can frustrate users, leading them to leave the site quickly, which negatively impacts SEO rankings. In essence, optimizing site speed is crucial for both improving user satisfaction and enhancing a website’s visibility and ranking in search engine results. By following these tips, you can ensure that Google rewards your site thanks to its speed and performance:

✅ Ensure a Mobile-friendly Design

It’s 2024. Mobile-friendly design is absolutely essential for SEO because it aligns with Google’s mobile-first indexing as well as enhancing user experience, improving page loading speed, and helping you capture mobile search traffic effectively.

✅ Compress Images and Optimize File Sizes

Compressed images and optimized file sizes play a crucial role in SEO by improving page loading speed, enhancing user experience, reducing bounce rates, and facilitating better crawling and indexing by search engines.

✅ Minimize CSS and JavaScript

Minimizing CSS and JavaScript impacts page loading speed and user experience – both of which are key factors considered by search engines when ranking websites.

- Faster Page Load Speed: Minimized CSS and JavaScript files have a smaller size, leading to faster download and processing times. Since page load speed is a ranking factor for search engines like Google, faster loading pages tend to rank higher.

- Improved User Experience: Faster loading times enhance the user experience, especially on mobile devices. A good user experience is crucial for keeping visitors on your site and encouraging engagement which is assumed to be beneficial for SEO.

✅ Utilize Browser Caching

Caching is a fundamental aspect of website optimization that significantly contributes to SEO by improving page loading speed, user experience and mobile optimization.

- Faster Page Load Times: Caching allows browsers and servers to store copies of your web pages. When a user revisits your site, the browser can load the page from the cache rather than retrieving it all over again from the server. This significantly reduces page load times, which is, as we’ve already mentioned a few times, a ranking factor.

- Enhanced User Experience: Users tend to prefer and engage more with websites that load quickly and smoothly. By reducing load times through caching, you improve the user experience – we’ve already explained the benefits of good UX for SEO above.

- Mobile Optimization: With the increasing prevalence of mobile browsing, where network speeds can be variable, caching plays a crucial role in ensuring your website loads quickly on mobile devices, aligning with Google’s mobile-first indexing approach.
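Browser caching is controlled through HTTP response headers, most importantly Cache-Control. The Python sketch below shows one possible policy: long-lived caching for static assets and revalidation for everything else. The extension list and function name are hypothetical, and the one-year lifetime assumes that asset filenames change whenever their content changes (for example site.3f2a1c.css).

```python
import posixpath

ONE_YEAR_IN_SECONDS = 60 * 60 * 24 * 365  # 31,536,000

# Hypothetical list of file types treated as static assets.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a requested path."""
    extension = posixpath.splitext(path)[1].lower()
    if extension in STATIC_EXTENSIONS:
        # Browsers may reuse the stored copy for a year without asking again.
        return f"public, max-age={ONE_YEAR_IN_SECONDS}"
    # HTML pages: the browser may store a copy but must revalidate it first.
    return "no-cache"
```

In practice these headers are usually set in your web server, CDN or caching plugin configuration rather than in code, but the decision being made is the same.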

✅ Ensure Core Web Vitals Scores Fall Within the “Good” Performance Range

As per Google Search Central:

Core Web Vitals is a set of metrics that measure real-world user experience for loading performance, interactivity, and visual stability of the page.

These metrics are:

- Largest Contentful Paint (LCP): Measures loading performance. To provide a good user experience, strive to have LCP occur within the first 2.5 seconds of the page starting to load.

- First Input Delay (FID): Measures interactivity. To provide a good user experience, strive to have an FID of less than 100 milliseconds. Starting March 2024, Interaction to Next Paint (INP) will replace FID as a Core Web Vital.

- Cumulative Layout Shift (CLS): Measures visual stability. To provide a good user experience, strive to have a CLS score of less than 0.1.

The Core Web Vitals report in Google Search Console shows you how your website’s pages perform in terms of Core Web Vitals, based on real world usage data (sometimes called field data). Google uses a Red (Poor), Amber (Needs Improvement), Green (Good) ranking scale to rank your pages’ performance.
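The three-band rating can be expressed as a simple threshold lookup. In the Python sketch below, the “Good” limits are the ones quoted above; the upper limits for “Needs Improvement” and the INP row are not from this article but from Google's public web.dev guidance. The function name is hypothetical.

```python
# metric: (good_max, needs_improvement_max)
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless score
}


def rate(metric: str, value: float) -> str:
    """Classify a field measurement as Good, Needs Improvement or Poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "Good"
    if value <= needs_improvement_max:
        return "Needs Improvement"
    return "Poor"


measurements = {"LCP": 2.1, "INP": 340, "CLS": 0.31}
report = {metric: rate(metric, value) for metric, value in measurements.items()}
```

With these made-up measurements, the page would be rated Good for LCP, Needs Improvement for INP and Poor for CLS, so layout stability would be the first thing to fix.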

In 2021, Google began incorporating Core Web Vitals into its ranking algorithm, meaning that if your site’s Core Web Vitals are mostly “Good” (Green) then you are likely to get a ranking boost.

By focusing on Core Web Vitals and optimizing your website accordingly, you not only improve SEO rankings but also provide a smoother, more enjoyable user experience, leading to increased user engagement and conversions. These metrics have become a crucial part of website optimization and should be considered in any SEO strategy.

✅ Consider Using A Content Delivery Network (CDN)

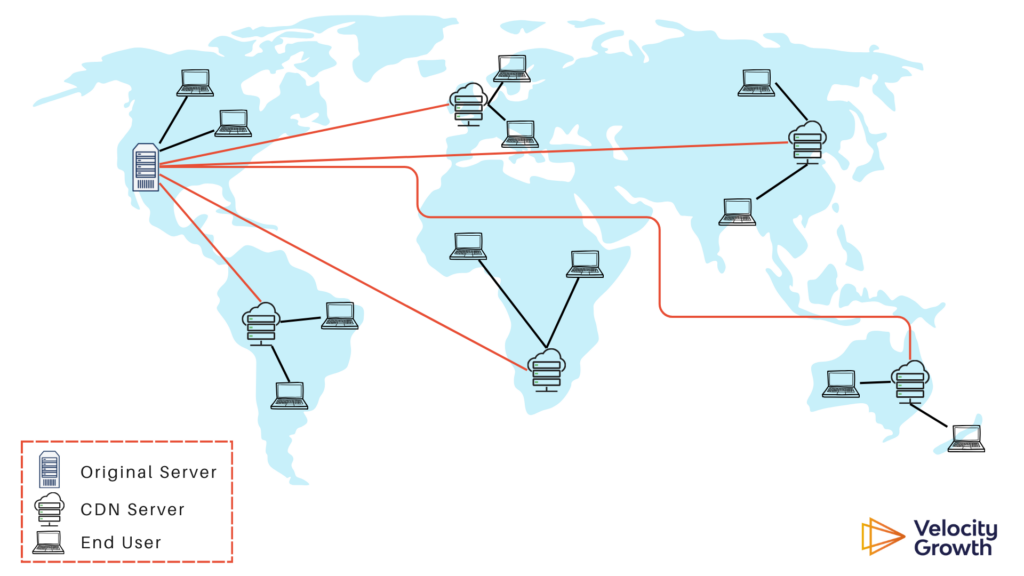

A Content Delivery Network (CDN) is a system of distributed servers that deliver web content to users based on their geographic location. Essentially, a CDN stores a cached version of your website content in multiple geographical locations, known as “Points of Presence” (PoPs). Each PoP contains a number of caching servers responsible for content delivery to visitors within its proximity.

There are multiple reasons to use a CDN. From an SEO perspective, a CDN can significantly enhance page loading speed and user experience no matter where in the world your users are, which, as we’ve already explained, has multiple positive knock-on impacts for SEO.

Using a CDN for static content like images and media files and even CSS and JavaScript files is a no-brainer. If, however, your site serves dynamic content which changes frequently or is user-specific, the use of a CDN may not be the best choice.

✅ Use HTTPS protocol for security

By installing an SSL/TLS security certificate and serving your site over HTTPS (HyperText Transfer Protocol Secure), you create an encrypted link between the user’s browser and your website. This encryption ensures that all data transferred remains private and secure, making it nearly impossible for anyone to intercept and understand the information exchanged.

But the benefits of HTTPS extend beyond just security and privacy. Since 2014, Google has considered HTTPS as a ranking factor in its search algorithm. Not only that, Google has stated that it may issue manual actions or penalties for websites that collect sensitive user data without HTTPS.

Content

Just because search engines can crawl your whole site doesn’t mean they understand who you are and what products / services you offer. On-page content signals are one of the most important factors in Google’s algorithm, so it’s vital that you understand how to write and optimize site content.

You should optimize your on-page content to ensure that Google knows exactly what it’s about and increase your chances of ranking for relevant search queries. Here is how to do so:

✅ Carry Out Topic / Keyword Research

You need to be super clear on what product / service features, customer pain points and other topics are most important to your target audience before building your website. This is fundamental to a successful SEO plan.

At a very early stage it is important to carry out topic research* to identify all key topics for which core site content is required.

(*I use the term “topic” instead of “keyword” as one topic might share a bunch of very similar keywords e.g. “seo agency”, “search engine optimization agency”, “seo consultancy”, “seo company”, etc. – these are essentially all the same topic and would only require a single page on your website.)

There are many ways to go about topic research – the ultimate goal is to identify (groups of) keywords that are…

- Relevant to your product/service offering (Relevance)

- Likely to be searched for by your target market (Volume)

- Not so competitive that it will be impossible to rank (this comes down to authority vs competitors) (Difficulty)

… and then create content dedicated to those topics.

For a new website, your topic research process might be:

1. Build your “Seed List” of topics that you want to rank for. You might build this list through:

   - Listing the obvious products and services you offer

   - Listing key target audience pain points / problems that your product / service solves

   - Using a tool like SEMrush to check which keywords drive the most traffic to your competitors’ websites or which page topics attract the most traffic

2. Review your list and assign “Relevance” scores

   - Not all the keywords / topics that you discover via Step 1 above will be relevant to your own core product / service offering.

   - For example, you may find that a competitor is attracting a lot of traffic via search terms related to a product that you do not sell and have no plans to sell.

3. Add in Search Volume and Google Ads Conversion data (if you’ve got it)

   - Whilst search volume is certainly not the only factor that you should take into account when deciding on what topics to write about, it will help you to prioritize within a comprehensive list of topics. If you have two highly relevant topics, one of which has huge search demand e.g. 20,000 searches per month and another which has search demand of 10 searches per month, more often than not, the former will be higher priority.

   - If you happen to have Google Ads campaigns running and can see that users tend to convert on your site when they search for a certain topic, you have solid evidence that users searching for that term are likely converters. This warrants at least a little bump in priority.

4. Assign a priority score to each of your topics based on Relevance, Volume and Conversion Rate

   - Depending on how important each of these factors is to you, you can calculate the priority of each keyword based on a weighted average of your Relevance, Volume and Conversion scores. Usually, a simple average of all three without any additional weighting will be fine.
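The priority calculation described above is a plain weighted average. Here is a minimal Python sketch, assuming you have scored each factor on the same scale (1 to 5 here). The topics, scores and function name are made up for illustration.

```python
def priority_score(relevance, volume, conversion, weights=(1.0, 1.0, 1.0)):
    """Weighted average of the three scores; equal weights give a simple average."""
    scores = (relevance, volume, conversion)
    weighted_total = sum(score * weight for score, weight in zip(scores, weights))
    return weighted_total / sum(weights)


# Hypothetical topics with (relevance, volume, conversion) scores from 1 to 5.
topics = {
    "seo agency": (5, 4, 3),
    "what is seo": (3, 5, 1),
    "seo audit service": (5, 2, 5),
}

ranked = sorted(topics, key=lambda topic: priority_score(*topics[topic]), reverse=True)
```

With equal weights, “seo agency” and “seo audit service” both score 4.0 and “what is seo” scores 3.0. If relevance matters twice as much to you as the other two factors, pass weights=(2, 1, 1) instead.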

✅ Create One Single Page For Each Key Topic

Once you know what features, pain points and topics are of interest to your target audience, you must create a single dedicated page for each of them and ensure that these pages are integrated into a logical site structure. Without a well integrated, dedicated page per topic, your chances of ranking for searches relevant to that topic are very low, especially with a brand new website.

A web page should be laser focused on one specific topic e.g. a specific product or service. If you mix two or more different topics on a page it becomes incredibly difficult to optimise the page for all topics and ensure that it ranks in a high position for queries relevant to all those topics.

The topic of the given web page should be the focus of (in order of priority):

- your page title tag

- the opening 100 words of your content (and throughout)

- your meta description

- your image alt text tags

The fact that each single page has only one meta description, one page title and one URL is part of the reason that it is so difficult to rank well for multiple topics with a single page.

✅ Use Descriptive and Unique Title Tags for Each Page

Now that you have a page dedicated to each topic that you want to rank for, your title tag (and, to a lesser degree, meta description) for each page should be optimized towards that target topic. This indicates to Google that your page is relevant to searches related to that topic and it can encourage higher click-through rates from the Google SERP.
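Uniqueness is easy to check automatically once you have a list of URLs and their titles (for example from a crawl export). The Python sketch below flags missing, duplicate and overly long titles. The 60-character limit is a common rule of thumb rather than a figure from Google, and the page data and function name are hypothetical.

```python
from collections import defaultdict


def audit_titles(titles_by_url: dict[str, str], max_length: int = 60) -> dict[str, list]:
    """Report pages with missing, duplicate or overly long title tags."""
    urls_by_title = defaultdict(list)
    missing, too_long = [], []
    for url, title in titles_by_url.items():
        cleaned = title.strip()
        if not cleaned:
            missing.append(url)
            continue
        # Compare titles case-insensitively when looking for duplicates.
        urls_by_title[cleaned.lower()].append(url)
        if len(cleaned) > max_length:
            too_long.append(url)
    duplicates = [sorted(urls) for urls in urls_by_title.values() if len(urls) > 1]
    return {
        "missing": sorted(missing),
        "too_long": sorted(too_long),
        "duplicates": sorted(duplicates),
    }


pages = {
    "/": "BI Platform for Finance Teams | Acme",
    "/pricing": "BI Platform for Finance Teams | Acme",
    "/blog": "Blog | Acme",
    "/contact": "",
}
issues = audit_titles(pages)
```

Here the home page and pricing page share a title, and the contact page has none, so both would need attention before launch.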

✅ Make Sure to Use H1s, H2s, H3s Logically

Again, H1s, H2s, etc. should be optimized towards the main topic and related sub-topics of the page to improve ranking potential for relevant searches.

They should also be used to structure your content with a clear and logical structure – users don’t want to see long, unstructured, seemingly never-ending lines of text. Neither do search engines. Search engines love structure.

Subheadings and paragraphs are essential for making sure users and crawlers can easily scan, read, and understand your content. Subheadings should be used to introduce new sub-topics / sections and to break down content further within those sections. From an SEO perspective you should use the following “rules” as a guide:

- H1 should be used only once within your content – at the very start – and should include the title of your article. This title should incorporate keywords that are closely aligned with your core topic.

- H2s should be used frequently to break up content further. Each sub-topic that you or your agency have identified should ideally be incorporated into an H2, where logical (sometimes it will make more sense to use an H3).

- H3s should be used for further segmentation of content within H2 sections – think of them as subheadings within your sub-topic sections.
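These rules can be checked automatically against a page template. The Python sketch below uses the standard library HTML parser to list the heading levels on a page and flag two problems: anything other than exactly one H1, and jumps that skip a level (for example an H1 followed directly by an H4). The skipped-level check is my own reading of the guidance above, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Record the level of every h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    outline = HeadingOutline()
    outline.feed(html)
    levels = outline.levels
    issues = []
    if levels.count(1) != 1:
        issues.append(f"expected exactly one h1, found {levels.count(1)}")
    elif levels[0] != 1:
        issues.append("h1 is not the first heading")
    # Compare each heading with the one before it to spot skipped levels.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} is followed by h{current} (skipped a level)")
    return issues
```

A page with one H1 followed by H2 sections and H3 sub-sections returns an empty list, which is the result you want for every template on the site.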

Expertise, Experience, Authoritativeness and Trust

Whilst all of the content tips above will ensure that you’re on the right track towards appearing in Google’s search results for relevant searches, they are no longer enough, in most cases, to be rewarded with the very top spots. This is thanks to Google’s introduction of the concept of “EEAT” into their ranking algorithm over the past number of years.

EEAT stands for Experience, Expertise, Authoritativeness, and Trustworthiness – the concept is a key component of the guidelines used by Google to assess the quality of web content. Whilst EEAT is not technically a direct ranking factor (i.e. there isn’t a specific EEAT metric that you can aim towards), Google’s Search Quality Guidelines focus heavily on EEAT. Google’s search quality raters review websites to determine whether they showcase experience, expertise, authoritativeness and trust, and their feedback is fed into Google’s ranking models, which in turn rank other websites that similarly showcase these traits.

A content piece’s level of EEAT is carefully considered by Google when deciding which content should rank highest, especially when it comes to YMYL content (Your Money, Your Life – e.g. content related to news, government / law, financial advice, shopping info, medical advice, info on people, etc.). But what exactly is Google looking for?

- Experience: Refers to the practical knowledge or skill that a content creator has gained through direct involvement or participation in a topic. It acknowledges that firsthand experience can be a valuable form of expertise, especially in subjects where formal qualifications might not be as relevant.

- Expertise: Refers to the depth of knowledge or skill in a specific field. In the context of web content, it means that the content should be created by individuals who are knowledgeable and well-informed about the subject matter. This can include formal education, professional experience and qualifications.

- Authoritativeness: Refers to the credibility of the content creator, the content itself, and the website where the content is published. Authoritativeness is established through the reputation of the content creator, the quality of the information provided, and the recognition of both by peers and other authoritative sources in the field.

- Trustworthiness: Refers to the reliability and honesty of the content, the content creator, and the website. It involves aspects like accuracy, transparency of authorship, clear distinction between sponsored and non-sponsored content, and the secure handling of user data (especially important for e-commerce sites).

If you’re creating your website from scratch, you’re at the perfect point to build it with EEAT in mind. Follow these tips during the planning and build phase and onwards into your ongoing content creation:

✅ Create Unique, Valuable, Relevant Content

Google’s goal is to rank content that is genuinely helpful to users. Content that showcases the author’s expertise or experience relative to the topic will be rewarded. Your site content should be relevant to your audience’s pain points and problems and it should demonstrate in-depth knowledge or skill in your field. Cookie-cutter content that’s just the same as everyone else’s simply won’t work. Why would it?

✅ Ensure Content Creators Have Relevant Qualifications / Experience

As we have discussed, Google’s goal is to rank content from authors that have genuine expertise or experience relative to the topic they are writing about. Ensuring your content writers are experts in their field is the first crucial step in showcasing content EEAT to Google.

If you don’t have the internal SME resources to write expert-driven content, consider outsourcing content creation to genuine experts or, alternatively, getting well researched content written by non-experts and then having that content verified by genuine experts.

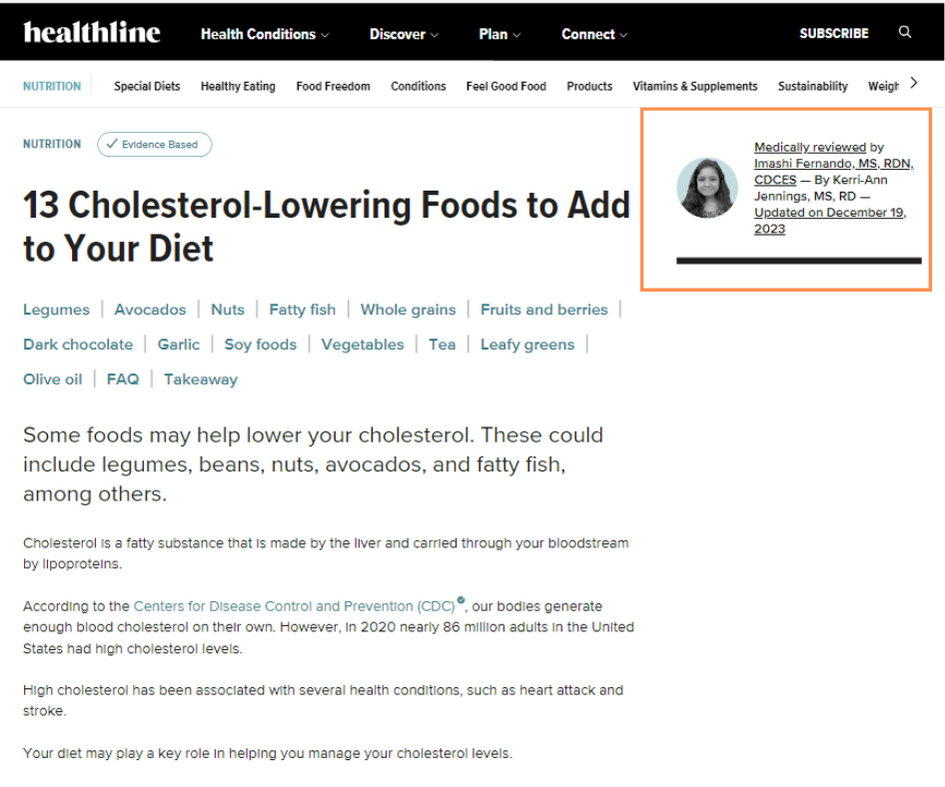

In the example below from Healthline, you can see that the content has been medically reviewed and verified by a subject matter expert, in this case a dietitan nutritionist.

✅ Include Author Name / Bios in Blog Posts

It’s one thing for us to know our content is written or verified by subject matter experts. It’s another thing making sure that Google understands this. The most fundamental signal for Google in this regard is a visible author name or bio on every blog post.

✅ Link Author Bios to Author Pages

Your author bio on each blog post should link off to an author page with more details on the author, ideally including outbound links to other profiles online and internal links to all the posts that the expert has written or verified. This is a further signal to Google that this person is a genuine expert.

✅ Include Article and Author Schema.org Markup on Blog Posts

Article structured data on your article pages can help Google understand more about the content and show better title text, images, and date information for the article in search results on Google Search and other properties. Embedding detailed author structured data within this article structured data can provide an additional boost to the credibility of the content and impact EEAT positively.

Implementing Schema.org markup, specifically for articles and author information, is a pivotal strategy in showcasing your website’s alignment with EEAT principles. Schema.org is a collaborative project that provides a collection of shared vocabularies webmasters can use to mark up their pages in ways that can be understood by major search engines. This markup helps search engines understand the content and context of your website better, which can significantly impact your SEO.

- Article Schema Markup: By incorporating ‘Article’ schema markup in your blog posts, you provide search engines with explicit metadata about the content of your posts. This metadata can include the title of the article, a brief description, the author’s name, the date published, and the article body. When search engines have this detailed information, they can display your content more effectively in search results, potentially increasing visibility and click-through rates. The Article schema also helps search engines understand the subject and quality of your content, which is crucial for EEAT.

- Detailed Author Schema Markup: Adding additional ‘Person’ schema to your blog posts adds another layer of credibility and trustworthiness to your content. This markup should include detailed information about the author, such as their name, a short biography, their area of expertise, and any relevant credentials or accolades that establish their authority in the field. By clearly linking the author to their expertise, you help search engines understand the authority behind the content, directly feeding into the EEAT criteria.

To implement this markup, you can use a WordPress plugin such as All in One SEO, or add it manually with the help of Google’s Structured Data Markup Helper. After adding the appropriate schema to your blog posts, you can test it using Google’s Rich Results Test tool to ensure it’s correctly recognized by Google. Remember, while implementing schema markup requires some technical know-how, the SEO and credibility benefits it brings can be substantial.
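If you are adding the markup manually, it can help to see what the two schema types look like once they are combined. The Python sketch below is only an illustration, not the output of any particular plugin: the function names, the sample author and the choice of fields are all assumptions made for this example. It builds an ‘Article’ object with a nested ‘Person’ as the author, using standard Schema.org properties (`headline`, `description`, `datePublished`, `author`, `jobTitle`, `knowsAbout`), and wraps the result in the JSON-LD script tag that would sit in the page’s HTML.

```python
import json


def build_article_jsonld(headline, description, date_published, author):
    """Return a schema.org Article dict with a nested Person as the author.

    `author` is a plain dict with hypothetical keys chosen for this sketch:
    name, bio, job_title and expertise (a list of topics).
    """
    person = {
        "@type": "Person",
        "name": author["name"],
        "description": author["bio"],
        "jobTitle": author["job_title"],
        "knowsAbout": author["expertise"],
    }
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "datePublished": date_published,
        "author": person,
    }


def to_script_tag(data):
    """Serialise the dict as a JSON-LD script block for the page's HTML."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so text inside the JSON cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Placeholder values for illustration only.
    example_author = {
        "name": "Jane Doe",
        "bio": "SEO consultant who writes about technical site audits.",
        "job_title": "SEO Lead",
        "expertise": ["Technical SEO", "Structured data"],
    }
    article = build_article_jsonld(
        headline="An Example Blog Post",
        description="A short summary of the post.",
        date_published="2024-01-15",
        author=example_author,
    )
    print(to_script_tag(article))
```

Whichever route you take to generate it, paste the resulting markup into the Rich Results Test to confirm that Google reads it as intended.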

✅ Clearly Distinguish Sponsored Content From Editorial Content

Distinguishing sponsored content and advertisements from editorial content is important because it builds trust with users and showcases to Google that you are a genuine, reputable business. If sponsored content is not clearly marked, it can be perceived as deceptive, leading to a loss of trust both from users and search engines. This can negatively impact a site’s search rankings.

✅ Feature Customer Reviews and Testimonials

Featuring customer reviews and testimonials on your website is a good idea because they showcase real-world evidence of your business’s trustworthiness and authority. Such feedback from customers provides social proof, affirming the quality and reliability of your products or services, in turn helping to build credibility with both potential customers and search engines.

✅ Set up a Google Business Profile

A Google Business Profile is a free tool provided by Google that allows business owners to manage how their business information is displayed across Google services, including Search and Maps. By creating and optimizing a Google Business Profile, businesses can increase their visibility online. It can also help show Google that you are a legitimate business, and may have other indirect positive effects on EEAT.

✅ Submit Your Domain to Relevant Directories

By submitting your domain to reputable online directories that are relevant to your business type and location, you showcase to Google that you are a legitimate business. You also accumulate relevant backlinks to your site, which further boost your domain’s authority in Google’s eyes.

✅ Build Links From Other Websites in Your Network

Again, accumulating relevant backlinks to your site will boost your domain’s authority in Google’s eyes.

Links from other trusted websites indicate to search engines that your website is an authoritative, trusted source. At its most basic level, Google sees links from other websites as votes of confidence in your content and your website as a whole.

In the early days, Google had a pretty uncomplicated means of calculating the authority of a website: the more links that pointed to your website, the higher the authority Google perceived you to have, and the better your content ranked. That all changed, however, in 2012 with the rollout of Google’s “Penguin” update, which adjusted Google’s algorithm to take into account the trustworthiness and relevance of links, rather than just the number of links pointing to your website.

Ultimately though, by building links from other trusted websites, your site will be seen as trustworthy and search engines will rank your content higher in search results for relevant queries. It may also have the effect of increasing the frequency at which Google crawls your website.

✅ Build a Presence on Social Media

Creating and actively maintaining social media profiles allows you to demonstrate your expertise, build authority, foster trust, engage with your audience, and reinforce your brand identity. All of these elements align with EEAT principles, which are important not only for social media success but also for your overall online reputation and SEO performance.

✅ Include Sharing Buttons on Blog Content

By making it easy for users to share your content, you may encourage wider discussions and engagement, and may also indirectly encourage more inbound links by making your content available to a wider audience. This all serves to strengthen your online reputation and credibility within your industry or niche.

✅ Showcase Awards and Endorsements

Similar to customer reviews and testimonials, showcasing awards and endorsements can serve as tangible evidence of your expertise, authority, and trustworthiness, making your website and content more credible and appealing to your audience.

✅ Make Privacy Policy and Terms of Service Comprehensive

One item that often goes under the radar is a bespoke privacy policy and terms of service – this can build trust with users and showcase to Google that you are a genuine, reputable business.

✅ Clearly Display Contact Information

By showcasing your contact information (e.g. name, address and phone number) on your website and other online profiles, you build trust with users and showcase to Google that you are a genuine, reputable business.

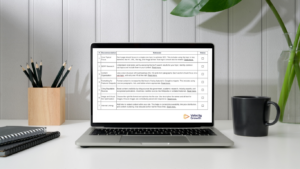

Download the New Website SEO Checklist

We’ve collated these tips into a handy downloadable checklist. Download it here: Velocity Growth – New Website SEO-Readiness Checklist

Or if you’ve got any SEO questions, feel free to get in touch.

Darren McManus is an accomplished SEO Lead at Velocity Growth, a leading digital marketing agency. With over seven years of experience working agency-side, Darren has established himself as an expert in the field of SEO. Based in Galway, Ireland, he has developed bespoke SEO roadmaps and implemented long-term, award-winning SEO strategies for clients spanning various industries.

Darren possesses in-depth knowledge and experience across all areas of SEO, including technical optimization, on-site optimization, and off-site optimization. His comprehensive understanding of search engine optimization techniques enables him to craft effective strategies that significantly enhance organic visibility, drive website traffic, and generate conversions for his clients.

His expertise in SEO has been instrumental in helping his clients achieve their digital marketing goals and grow their businesses online. His track record of success demonstrates his ability to deliver tangible results in highly competitive markets.